The Role of Advanced Mathematics in AGI: Could Abstract Theories Pave the Way?

Why the AI Industry Should Harness Category Theory.

Since the release of OpenAI's ChatGPT, large language models (LLMs) have advanced significantly, prompting substantial investment in foundational model companies such as OpenAI and Anthropic in the US and Mistral in Europe. Considerable capital has also been directed towards vertical foundation model companies developing industry-specific models, exemplified by EvolutionaryScale's $142 million seed funding in the US and Bioptimus's $35 million in France.

The influx of capital has also led to scrutiny regarding the generative AI sector's ability to meet high expectations. In a post entitled "AI's $600B Question," Sequoia partner David Cahn discusses the increasing financial challenges within the AI industry. While GPU supply shortages have lessened and companies like OpenAI lead in revenue, the disparity between capital expenditure and revenue generation has grown, raising concerns about potential investment losses and market saturation. Despite these hurdles, Cahn seems optimistic about the sector's long-term value, particularly for companies that can provide tangible user benefits amid falling GPU costs and ongoing innovation.

Interestingly, prominent foundational model companies are also striving to achieve the "holy grail" of technology: artificial general intelligence (AGI). However, the pathway to AGI remains a topic of significant debate and uncertainty within the scientific community. While LLMs have demonstrated remarkable capabilities in various domains, it is still unclear whether they can evolve into true AGI. The complexity and multifaceted nature of AGI pose challenges that go beyond the current scope of LLMs, prompting ongoing research into alternative approaches and more advanced methodologies.

Can more advanced mathematical techniques help?

While machine learning (ML) is already deeply rooted in mathematical principles, many experts believe that the path to AGI will require even more sophisticated mathematics than is currently utilised in popular models. The reader might wonder, isn't ML already highly mathematical? The answer is yes, but on this view the mathematics employed in today's popular ML algorithms, such as gradient descent and backpropagation, is not sufficient to achieve AGI. It is anticipated that new mathematical frameworks and theories will need to be developed to address the complex and nuanced challenges AGI poses. These include advanced forms of statistical learning, novel optimisation techniques, and new approaches to handling uncertainty and decision-making at a level mimicking human cognitive processes. Indeed, the journey towards AGI is expected to push the boundaries of both computing and the application of advanced mathematics.

Put another way, the mathematics underlying today's popular ML algorithms is quite old: gradient descent goes back to Cauchy in 1847, and the calculus it rests on was developed centuries ago. In contrast, modern mathematics has evolved significantly, especially in the last fifty years. New mathematical theories have become so specialised that finding a mathematician proficient in more than one branch of mathematics is challenging. These advanced branches include Algebraic Topology and Category Theory. We at Zaiku Group are particularly excited about Category Theory's potential as the right framework for exploring the pathway to AGI.

What is Category Theory?

Category Theory is a fascinating branch of mathematics with roots in Algebraic Topology, going back to the seminal work of Samuel Eilenberg and Saunders Mac Lane in the 1940s. It emerged as a powerful framework to unify and generalise various mathematical concepts and structures.

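The basic concepts of the subject are:

Categories: A category consists of objects and morphisms (arrows) between those objects. Morphisms that line up head to tail can be composed, composition is associative, and every object has an identity morphism that leaves any morphism composed with it unchanged.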

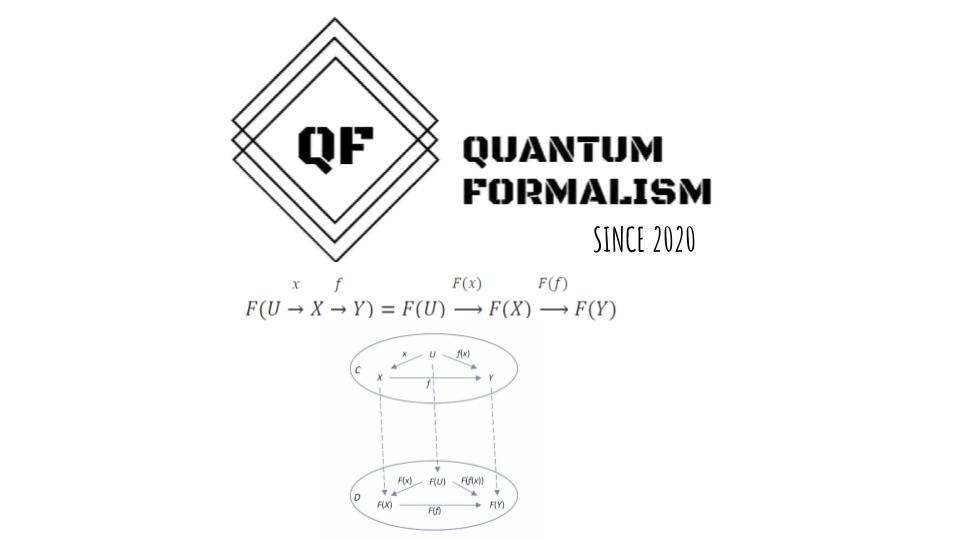

Functors: Functors are mappings between categories that preserve the structure of categories, i.e., they map objects to objects and morphisms to morphisms in a way that respects composition and identities.

Natural Transformations: Natural transformations provide a way of transforming one functor into another while respecting the structure of the categories involved.
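For readers who think in code, the three concepts above can be sketched in Python. This is only an informal illustration, assuming we treat Python types as objects and functions as morphisms; the names `fmap_list`, `fmap_optional` and `safe_head` are hypothetical helpers introduced here, not part of any library. The list construction plays the role of a functor, and `safe_head` plays the role of a natural transformation from the list functor to an "optional value" functor (with `None` standing for "no value", which assumes the values themselves are never `None`).

```python
from typing import Callable, List, Optional, TypeVar

A = TypeVar("A")
B = TypeVar("B")
C = TypeVar("C")


def compose(g: Callable[[B], C], f: Callable[[A], B]) -> Callable[[A], C]:
    """Composition of morphisms: apply f first, then g."""
    return lambda x: g(f(x))


def identity(x: A) -> A:
    """Identity morphism on any object (type)."""
    return x


def fmap_list(f: Callable[[A], B]) -> Callable[[List[A]], List[B]]:
    """List functor on morphisms: lift f to act element-wise on lists."""
    return lambda xs: [f(x) for x in xs]


def fmap_optional(f: Callable[[A], B]) -> Callable[[Optional[A]], Optional[B]]:
    """Optional functor on morphisms: apply f unless the value is missing."""
    return lambda x: None if x is None else f(x)


def safe_head(xs: List[A]) -> Optional[A]:
    """Candidate natural transformation List -> Optional: first element, if any."""
    return xs[0] if xs else None


def add_one(n: int) -> int:
    return n + 1


def double(n: int) -> int:
    return n * 2


sample = [1, 2, 3]

# Functor laws, checked on one sample (a spot check, not a proof).
preserves_identity = fmap_list(identity)(sample) == identity(sample)
preserves_composition = (
    fmap_list(compose(double, add_one))(sample)
    == compose(fmap_list(double), fmap_list(add_one))(sample)
)

# Naturality: mapping then taking the head equals taking the head then mapping.
naturality_holds = all(
    safe_head(fmap_list(add_one)(xs)) == fmap_optional(add_one)(safe_head(xs))
    for xs in (sample, [])
)

print(preserves_identity, preserves_composition, naturality_holds)
```

The checks only test the laws on a couple of inputs, so they illustrate what "respecting composition and identities" means rather than establishing it.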

If you have taken a linear algebra course at university, you are probably familiar with vector spaces. Vector spaces over a fixed field indeed form a category, denoted Vect. In this category, the objects are vector spaces, and the morphisms (arrows) between these objects are linear maps. Linear maps are functions that preserve the vector space structure, meaning they respect vector addition and scalar multiplication.

For finite-dimensional vector spaces, once a basis is chosen for each space, these linear maps can be represented by matrices. Composition of morphisms (linear maps) in Vect then corresponds to matrix multiplication, and the identity morphism for each object (vector space) is represented by the identity matrix, which leaves any vector unchanged under multiplication.
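The correspondence between composition and matrix multiplication can be checked directly. The following is a minimal sketch in plain Python, assuming standard bases and small integer matrices stored as lists of rows; the helper names `matmul`, `apply_map` and `identity_matrix` are hypothetical and chosen only for this illustration.

```python
from typing import List

Matrix = List[List[int]]
Vector = List[int]


def identity_matrix(n: int) -> Matrix:
    """Matrix of the identity morphism on an n-dimensional space."""
    return [[1 if i == j else 0 for j in range(n)] for i in range(n)]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Matrix product a @ b, representing 'apply b first, then a'."""
    inner = len(b)
    if any(len(row) != inner for row in a):
        raise ValueError("shapes do not line up, so the maps are not composable")
    return [
        [sum(a[i][k] * b[k][j] for k in range(inner)) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def apply_map(m: Matrix, v: Vector) -> Vector:
    """Apply the linear map represented by m to the vector v."""
    return [sum(row[k] * v[k] for k in range(len(v))) for row in m]


# f: R^2 -> R^3 and g: R^3 -> R^2, written in the standard bases.
F: Matrix = [[1, 2], [0, 1], [3, 0]]
G: Matrix = [[1, 0, 2], [0, 1, 1]]
v: Vector = [1, -1]

# Composing the maps (g after f) agrees with multiplying the matrices.
step_by_step = apply_map(G, apply_map(F, v))
via_product = apply_map(matmul(G, F), v)
print(step_by_step == via_product)

# Identity matrices act as identity morphisms on either side.
print(matmul(identity_matrix(3), F) == F and matmul(F, identity_matrix(2)) == F)
```

Note that `matmul` refuses matrices whose shapes do not line up, which mirrors the categorical rule that two morphisms can only be composed when the target of the first is the source of the second.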

This categorical perspective provides a unified framework to study and understand various properties and operations on vector spaces, offering deeper insights and powerful tools that extend beyond ordinary Linear Algebra into other fields such as Functional Analysis and Group Theory.

Breaking Down Barriers to Category Theory for AGI

Unfortunately, due to its abstract nature, Category Theory has remained somewhat inaccessible to machine learning practitioners working on cutting-edge products that could significantly impact their industries. While popular online platforms such as Coursera offer self-paced, video-based courses like the 'Mathematics for Machine Learning and Data Science Specialization,' they often avoid deeper mathematical subjects such as Category Theory in order to cater to a broader audience.

Furthermore, in these video-based courses, learners are left to passively consume information without the opportunity to ask questions in real time or receive immediate clarification on complex topics. This lack of direct interaction can lead to misunderstandings and gaps in knowledge.

To eliminate the barriers to advanced mathematics, our virtual community, Quantum Formalism (QF), has embraced a new mission: to transform every data scientist, AI researcher, and software engineer in emerging technologies into proficient mathematicians. By empowering them with the necessary mathematical knowledge and skills, we aim to enable these professionals to excel in their industries and push the boundaries of innovation.

You can read more about QF's journey in our recent Substack post 'Quantum Formalism: The Story Behind'.

If you know any ambitious researchers or software engineers who aspire to master advanced branches of mathematics but feel intimidated, please do let them know about quantumformalism.com. We’ve even created tools such as 'Advanced Mathematics GPT' to help ease the process of learning abstract material. This custom GPT blends formal and informal language to provide clear explanations, making abstract mathematical concepts more accessible and less daunting. Of course, it occasionally produces hallucinations, as is typical with current LLMs! 😉

Thank you for taking the time to read this extended newsletter.

Happy weekend!

Many thanks,

Bambordé

Co-Founder & Head of Mathematical Sciences