The Role of Advanced Maths in AI Safety & Governance: Insights from Nuclear Arms Control.

AI Safety & Governance.

Dear friends,

As you may recall, we recently discussed Category Theory's potential in shaping AGI in our post "The Role of Advanced Mathematics in AGI: Could Abstract Theories Pave the Way?" Continuing on that theme, it's worth noting that back in 1962, at the height of the Cold War, the mathematician F. William Lawvere put forward a novel proposal, later expanded upon by Michèle Giry, that tried to use categories to create a framework for arms control verification at a time when nuclear weapons posed an existential risk to humanity.

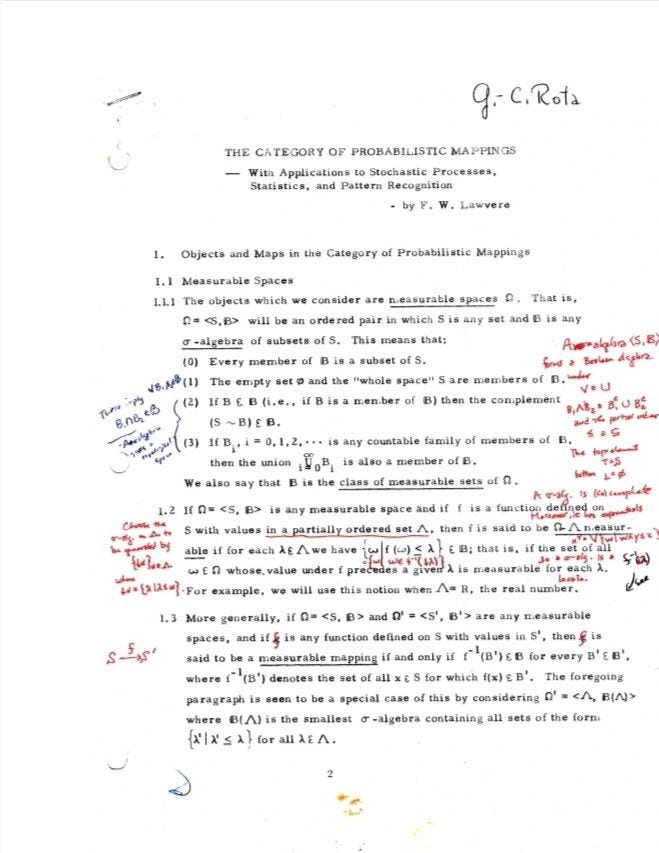

Their work, primarily through the lens of the 'Giry Monad' and its underlying adjunction, provided a novel framework for verifying compliance with nuclear treaties. This framework, rooted in mathematical rigour, was designed to manage the enormous risks associated with nuclear weapons. Below is a scanned copy of the original work by the renowned mathematician Gian-Carlo Rota:

Note: Lawvere's appendix, a scanned copy of which was not released to the public until 2012, played a crucial role in the development of categorical probability. Michèle Giry, along with French intelligence, obtained this document, and from it, Giry significantly advanced the field. The appendix originally served as a framework for arms control verification, highlighting the foundational connection between category theory and probability.

Inspiration for AI Governance & Safety

In today's context, as AI systems grow increasingly powerful, these abstract mathematical principles could offer a compelling blueprint for AI safety & governance. The adjunction between probability and strategy in the Giry Monad, which later influenced key constructions in Category Theory, could serve as a sophisticated model for understanding and mitigating the risks associated with AI. Adopting such ideas could enable us to develop protocols for AI that ensure safety, fairness, and accountability, paralleling the deliberate approaches used in nuclear arms control.
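For readers who like to see the idea in code, here is a minimal sketch of what a probability monad does, under loud assumptions. The Giry Monad proper lives on measurable spaces; this toy version only handles distributions over a finite set of outcomes. The names `unit`, `bind`, `prior`, and `inspect`, as well as every number, are hypothetical and chosen purely for illustration. None of this is taken from Lawvere's or Giry's work.

```python
from collections import defaultdict
from fractions import Fraction


def unit(x):
    """Monad unit: the point-mass distribution that is certain of x."""
    return {x: Fraction(1)}


def bind(dist, kernel):
    """Monad bind: push a distribution through a stochastic map.

    `dist` maps outcomes to probabilities; `kernel` maps each outcome
    to a distribution over new outcomes (a Markov kernel).
    """
    out = defaultdict(Fraction)  # missing keys start at probability 0
    for x, p in dist.items():
        for y, q in kernel(x).items():
            out[y] += p * q
    return dict(out)


# Hypothetical prior belief about whether a treaty party is complying.
prior = {"compliant": Fraction(9, 10), "violating": Fraction(1, 10)}


def inspect(state):
    """Hypothetical imperfect inspection: what the inspectors report."""
    if state == "violating":
        return {"alarm": Fraction(4, 5), "no alarm": Fraction(1, 5)}
    return {"alarm": Fraction(1, 20), "no alarm": Fraction(19, 20)}


# Distribution over inspection reports, combining belief and procedure.
report = bind(prior, inspect)
print(report)
```

With these made-up numbers, an alarm is raised one time in eight. The point of the sketch is only that uncertain beliefs and imperfect verification procedures compose in a disciplined way, which is the kind of structure the categorical approach makes precise.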

Just as precise verification protocols were crucial for maintaining global security during the nuclear arms race, AI safety and governance could benefit from similarly rigorous frameworks. The historical precedent set by Lawvere's work offers a valuable roadmap for navigating the challenges of the emerging AI age as we move towards Artificial General Intelligence (AGI).

Thank you for reading our newsletter. I wish you a fantastic weekend ahead!

Many thanks,

Bambordé

I invite you to join my personal Substack (link below)! Later this month I will start posting there on a range of topics beyond what I cover here, including the latest intersections of politics and technology, thought-provoking insights, and new perspectives that you may find interesting.